Model Context Protocol (MCP) Meets Chatsistant

How one open standard turns every Chatsistant agent into a full‑stack powerhouse

Originally published May 19th 2025

Anthropic’s Model Context Protocol (MCP) defines a universal, LLM-native “USB-C port” for data and actions. Every compliant host, including Chatsistant, can discover and call any MCP Server without bespoke glue code. Think of it as JSON-RPC wiring plus AI-specific concepts (Tools, Resources, Prompts) that map 1-to-1 onto modern function-calling workflows. If you want the academic origin story, the seminal deep dive sits here.

If you simply want to turn your Chatsistant agents loose on Git, Slack, Postgres, IoT sensors—or anything else—read on.

1 · Why the World Needed MCP

Before MCP, every AI platform devised its own plugin model. OpenAI had function calls, Microsoft pushed Semantic Kernel “skills,” SaaS vendors shipped proprietary chatbots, and developers lost weeks writing translation layers. Worse, each layer handled auth, throttling, and schema drift differently.

The pain points were acute:

- Silos – LLMs often hallucinated because they lacked live context.

- Duplication – Teams re-implemented the same Slack or GitHub wrapper.

- Security drift – Forty different plugins meant forty different ways to leak secrets.

By March 2025 the industry hit a tipping point when OpenAI publicly adopted Anthropic’s spec. Suddenly Claude, GPT, Gemini, and DeepSeek could all rally around one open connector. That is the moment Chatsistant’s engineering team pivoted its roadmap: instead of fifty more bespoke “integrations,” we wired our Function-Calling Webhooks directly to MCP.

2 · MCP in Plain English

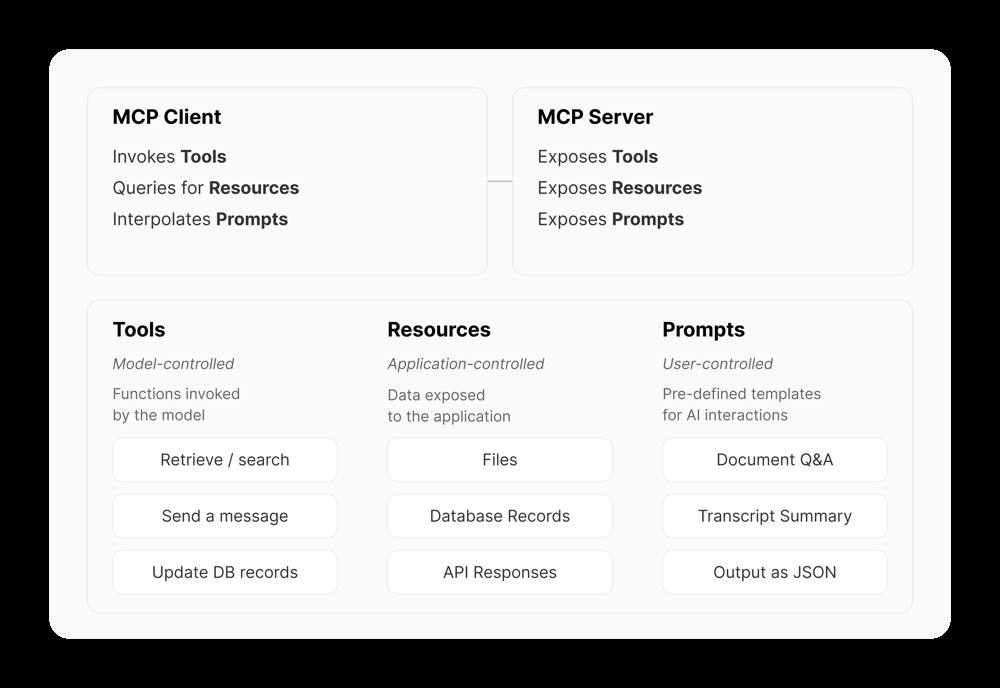

Technically, MCP is a JSON-RPC 2.0 profile plus three LLM-centric nouns:

- Tool 🛠️: a function the model may call (e.g., `search_code`, `send_email`). Metadata includes `name`, `description`, and an `inputSchema`.

- Resource 📄: read-only content the host can inject (e.g., `file://README.md`, `sensor://line2/temperature`). Concept explainer here.

- Prompt 📑: a reusable template or workflow script. Spec here.
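To make the Tool metadata concrete, here is a minimal sketch of one descriptor shaped like an entry in a `tools/list` result, with `name`, `description`, and a JSON Schema `inputSchema`. The `send_email` fields are hypothetical, and the `missing_required` helper only checks required keys; a real host would run a full JSON Schema validator.

```python
from typing import Any

# Hypothetical descriptor shaped like one entry of a `tools/list` result.
SEND_EMAIL_TOOL: dict[str, Any] = {
    "name": "send_email",
    "description": "Send a plain-text email to one recipient.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "to": {"type": "string", "description": "Recipient address"},
            "subject": {"type": "string"},
            "body": {"type": "string"},
        },
        "required": ["to", "body"],
    },
}


def missing_required(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """Return required argument names absent from `arguments` (not full schema validation)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


gaps = missing_required(SEND_EMAIL_TOOL, {"to": "cfo@example.com"})
```

Here `gaps` lists `body`, which a host could report back to the model before ever contacting the server.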

Above these concepts sits a classic Host ⇄ Client ⇄ Server pattern:

- Host Process – Chatsistant runtime where your LLM lives.

- MCP Client – a lightweight adapter, one per server, spawned by the host.

- MCP Server – wrapper exposing GitHub, SAP, spreadsheets, PLCs, etc.

Under the hood, MCP draws inspiration from Microsoft’s Language Server Protocol (LSP) and leverages JSON-RPC 2.0 for notifications, batching, asynchronous calls, and streaming.
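Because every MCP message is plain JSON-RPC 2.0, the distinctions that matter are small: a request carries an `id` and expects a response, a notification omits `id` and gets none, and a batch is a JSON array of either. The helper names below are illustrative, not from any SDK, and whether a server accepts batches depends on which MCP spec revision it implements.

```python
import itertools
import json
from typing import Any, Optional

_ids = itertools.count(1)  # each request gets a fresh, increasing id


def request(method: str, params: Optional[dict] = None) -> dict[str, Any]:
    """JSON-RPC 2.0 request: has an `id`, so the peer must send a response."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str, params: Optional[dict] = None) -> dict[str, Any]:
    """JSON-RPC 2.0 notification: no `id`, so no response is expected."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def is_notification(msg: dict[str, Any]) -> bool:
    return "method" in msg and "id" not in msg


# A batch is a JSON array of requests and/or notifications.
batch = [request("tools/list"), request("resources/list")]
wire = json.dumps(batch)
```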

3 · Nine Concrete Benefits

- Single Language – JSON-RPC across every tool (standardization).

- LLM-Native – Tools ↔ OpenAI function schemas, Resources ↔ RAG blobs, Prompts ↔ chain-of-thought guides.

- Context Continuity – Assistants carry breadcrumbs across Slack → Drive → SQL.

- Reusable Modules – Build a GitHub MCP Server once; IDE, chat, and agent hosts all share it.

- Vendor Neutral – Works with GPT-4o, Claude 3.7 Sonnet, Gemini 2.0 Flash, and DeepSeek V3.

- Two-Way Security – Servers stay behind your firewall; Hosts approve each state change.

- Live Streams – `resources/subscribe` lets servers push updates such as sensor data or Slack DMs to the host.

- Community Velocity – Microsoft’s Azure preview signals hyperscaler support.

- Proven Foundations – Inspired by LSP and built on JSON-RPC 2.0, MCP inherits a battle-tested communication layer for async, streaming, and notifications.

4 · How Chatsistant Embeds MCP (No Separate “Integrations” Tab Needed)

Chatsistant’s Agent Function-Calling Webhooks double as an MCP Client. The wiring looks like this:

```
# MCP Client (inside Chatsistant runtime)
POST https://app.chatsistant.com/webhooks/{agent_id}
Headers:
  X-MCP-Token: <random secret>

# Example JSON-RPC request forwarded to that URL
{ "jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {} }
```
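In the MCP specification, a session opens with an `initialize` request and a `notifications/initialized` notification before the first `tools/list` call. The sketch builds that sequence as plain dicts; `2025-03-26` is the spec revision current at publication (real clients negotiate it), the client name and version are placeholders, and how Chatsistant performs this step internally is not documented here.

```python
from typing import Any

PROTOCOL_VERSION = "2025-03-26"  # spec revision current in May 2025; negotiated in practice


def opening_messages(client_name: str, client_version: str) -> list[dict[str, Any]]:
    """Return the first three client-to-server messages of an MCP session, in order."""
    return [
        {
            "jsonrpc": "2.0",
            "id": 0,
            "method": "initialize",
            "params": {
                "protocolVersion": PROTOCOL_VERSION,
                "capabilities": {},  # advertise optional client features here
                "clientInfo": {"name": client_name, "version": client_version},
            },
        },
        # Sent only after the server has answered the initialize request.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        {"jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {}},
    ]


msgs = opening_messages("example-client", "0.1.0")
```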

Chatsistant automatically:

- Converts `tools/list` results into per-model function schemas.

- Executes `tools/call` with RBAC, tracing, and retries.

- Streams `result` payloads back to the conversation.

- Logs every interaction for audit and usage analytics.
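The first conversion step can be sketched under one assumption: the target is the OpenAI Chat Completions `tools` format. This is an illustration, not Chatsistant’s converter; other vendors need their own mapping, and some providers reject JSON Schema keywords they do not support, so a production converter may have to prune the schema.

```python
from typing import Any


def to_openai_tools(mcp_tools: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Map MCP tool descriptors onto the OpenAI Chat Completions `tools` format."""
    converted = []
    for tool in mcp_tools:
        converted.append(
            {
                "type": "function",
                "function": {
                    "name": tool["name"],
                    "description": tool.get("description", ""),
                    # MCP's inputSchema is already JSON Schema, so it passes through.
                    "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
                },
            }
        )
    return converted


tools_list_result = {
    "tools": [
        {
            "name": "search_code",
            "description": "Search the repository.",
            "inputSchema": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        }
    ]
}
openai_tools = to_openai_tools(tools_list_result["tools"])
```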

Supported Transports

We accept stdio pipes for co-located servers, or HTTPS + SSE/WebSocket for remote ones. Underneath, the wire format is always the same JSON-RPC.
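For the stdio transport, the MCP specification frames each JSON-RPC message as one line of text with no embedded newlines. The sketch uses `io.StringIO` in place of a child process’s pipes so it runs standalone; with a real server you would pass the `stdin`/`stdout` of a `subprocess.Popen(..., text=True)` process instead.

```python
import io
import json
from typing import Any, Iterator, TextIO


def write_message(stream: TextIO, msg: dict[str, Any]) -> None:
    """Write one message per line; json.dumps escapes any newlines inside strings."""
    stream.write(json.dumps(msg, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict[str, Any]]:
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


buf = io.StringIO()
write_message(buf, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(buf, {"jsonrpc": "2.0", "method": "notifications/initialized"})
buf.seek(0)
parsed = list(read_messages(buf))
```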

5 · Five End-to-End Scenarios

A. Finance Ops Bot

- Accounting MCP Server exposes `get_latest_invoice` and `email_pdf`.

- GPT-4o calls the first tool and receives the PDF.

- Claude Haiku calls the second tool with the CFO’s email → done.

B. Dev Copilot

GitHub Server → `search_code`, `create_branch`, `open_issue`. Gemini 1.5 Pro plans the fix, DeepSeek R1 writes the code, and o3-mini opens the issue, all via MCP.

C. Smart Factory Dashboard

PLC Server Resources: `sensor://line3/vibration`; Tools: `set_speed(line, rpm)`. Threshold alerts stream into a Chatsistant channel, and a human approves speed changes.
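The human-approval step can be sketched as a gate the host places in front of `tools/call`. Everything here is hypothetical: the `ApprovalGate` class, the `STATE_CHANGING` set, the `set_speed` stub, and the lambda standing in for an operator clicking Approve in the channel. Only the result shape (`content` parts plus `isError`) follows the MCP specification.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical: Tools that change physical state and therefore need a human "yes".
STATE_CHANGING = {"set_speed"}


def text_result(text: str, is_error: bool = False) -> dict[str, Any]:
    """Build a result in the MCP tools/call shape (content parts + isError)."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


@dataclass
class ApprovalGate:
    approve: Callable[[str, dict[str, Any]], bool]  # stands in for an operator prompt
    log: list[str] = field(default_factory=list)

    def call(self, name: str, arguments: dict[str, Any],
             execute: Callable[..., dict[str, Any]]) -> dict[str, Any]:
        if name in STATE_CHANGING and not self.approve(name, arguments):
            self.log.append(f"denied {name}")
            return text_result("Rejected by operator", is_error=True)
        self.log.append(f"ran {name}")
        return execute(**arguments)


def set_speed(line: str, rpm: int) -> dict[str, Any]:
    """Hypothetical PLC call; a real server would write to the controller here."""
    return text_result(f"{line} set to {rpm} rpm")


# Example policy: the "operator" approves speeds up to 1200 rpm only.
gate = ApprovalGate(approve=lambda name, args: args["rpm"] <= 1200)
ok = gate.call("set_speed", {"line": "line3", "rpm": 900}, set_speed)
blocked = gate.call("set_speed", {"line": "line3", "rpm": 1500}, set_speed)
```

A denied call still returns a well-formed tool result, so the model can explain the rejection instead of retrying blindly.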

D. Legal Summarizer

Google Drive Server lists new contracts as Resources, and its Prompts include “Summarize contract risks”. Team lawyers accept or reject edits, all tracked by the same Server.
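A Prompt like “Summarize contract risks” can be sketched as a template that a server renders into the `prompts/get` result shape from the MCP specification (`description` plus a list of role-tagged `messages`). The registry, the `summarize_contract_risks` name, and the `gdrive://` URI are hypothetical.

```python
from string import Template
from typing import Any

# Hypothetical prompt registry; MCP prompt arguments arrive as strings.
PROMPTS = {
    "summarize_contract_risks": {
        "description": "Summarize contract risks",
        "template": Template("Summarize the key risks in the contract at $uri for a legal reviewer."),
        "arguments": ["uri"],
    }
}


def get_prompt(name: str, arguments: dict[str, str]) -> dict[str, Any]:
    """Build a result shaped like the MCP `prompts/get` response."""
    prompt = PROMPTS[name]
    missing = [a for a in prompt["arguments"] if a not in arguments]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = get_prompt("summarize_contract_risks", {"uri": "gdrive://contracts/nda-2025.pdf"})
```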

E. Personal Automation

A Home Assistant Server wraps smart lights, thermostats, and calendars. The Chatsistant agent uses DeepSeek V3 to map voice commands to Tools such as `turn_on_light(room)`.

6 · Building an MCP Server (Python Example)

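```shell
pip install fastmcp
```

```python
from fastmcp import FastMCP

# The first positional argument is the server's display name.
srv = FastMCP("Weather Server")


def call_weather_api(city: str, days: int) -> dict:
    """Placeholder: swap in your real weather-API client."""
    return {"city": city, "days": days, "daily": []}


def db_get(city: str, date: str) -> str:
    """Placeholder: swap in your real cache or database lookup."""
    return f"No cached forecast for {city} on {date}"


@srv.tool()
def forecast(city: str, days: int = 1) -> dict:
    """Return a daily forecast."""
    return call_weather_api(city, days)


@srv.resource("weather://{city}/{date}")
def cached(city: str, date: str) -> str:
    """Return a cached forecast; URI template fields map to function parameters."""
    return db_get(city, date)


if __name__ == "__main__":
    # HTTP + SSE transport; transport names and keyword arguments vary across fastmcp releases.
    srv.run(transport="sse", host="0.0.0.0", port=5001)
```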

Official SDKs for Python, TypeScript, Java, Kotlin, and C# live at github.com/modelcontextprotocol.

Server Security Check-list

- Store secrets in env vars; never in code.

- Rate-limit externally facing endpoints.

- Implement allow-lists on `resources/read` URIs.

- Log `tools/call` parameters for audit.
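The last two checklist items can be sketched with the standard library. The URI patterns and secret key names are hypothetical; note that `fnmatch`’s `*` also matches `/`, so the sketch rejects `..` path segments (including percent-encoded ones) before pattern matching.

```python
import json
import logging
from fnmatch import fnmatchcase
from typing import Any
from urllib.parse import unquote

audit = logging.getLogger("mcp.audit")

# Hypothetical allow-list of URI patterns this server will serve via resources/read.
ALLOWED_URIS = ["weather://*", "file:///srv/public/*"]
SECRET_KEYS = {"api_key", "token", "password"}


def is_allowed(uri: str) -> bool:
    """Reject path traversal first, then require a match against the allow-list."""
    if ".." in unquote(uri).split("/"):
        return False
    return any(fnmatchcase(uri, pattern) for pattern in ALLOWED_URIS)


def audit_tool_call(name: str, arguments: dict[str, Any]) -> str:
    """Log tools/call parameters with obvious secrets masked; returns the logged line."""
    safe = {k: ("***" if k.lower() in SECRET_KEYS else v) for k, v in arguments.items()}
    line = json.dumps({"tool": name, "arguments": safe}, sort_keys=True)
    audit.info(line)
    return line
```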

7 · Wiring a Chatsistant Agent to Your Server

- Create/open an agent at app.chatsistant.com.

- Enable Function Calling under “Agent Settings”.

- Copy the webhook URL + token.

- Launch your MCP Server (local or cloud).

- Point an MCP Client at the webhook.

- Open the chat; you’ll see `tools/list` and `resources/list` hits in the logs.

Step-by-step screenshots mirror the External Data RAG guide and the API-Key setup.
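Pointing a client at the webhook can be sketched with the standard library, assuming (as in Section 4) that each JSON-RPC message is POSTed to the agent’s webhook URL with the `X-MCP-Token` header. The agent id and token are placeholders, and Chatsistant’s response format is not covered here.

```python
import json
import urllib.request

# Placeholders: copy the real URL and token from the agent's settings.
WEBHOOK_URL = "https://app.chatsistant.com/webhooks/{agent_id}"
MCP_TOKEN = "<random secret>"


def build_webhook_request(agent_id: str, message: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST carrying one JSON-RPC message."""
    return urllib.request.Request(
        WEBHOOK_URL.format(agent_id=agent_id),
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-MCP-Token": MCP_TOKEN},
        method="POST",
    )


req = build_webhook_request(
    "demo-agent", {"jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {}}
)
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```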

8 · MCP Versus Other Integration Schemes

OpenAI Function Calling

Fantastic for one-shot tool calls but proprietary and stateless. MCP sits under function-calling: the model emits a call → Chatsistant translates → MCP Server executes → result returns → model continues.
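The translate step in that loop can be sketched as two small functions, assuming OpenAI’s Chat Completions tool-call format on the model side and the MCP `tools/call` request and result shapes on the server side. Non-text content parts (images, embedded resources) are simply dropped here, which a production host would not do.

```python
import json
from typing import Any


def tool_call_to_mcp(tool_call: dict[str, Any], request_id: int) -> dict[str, Any]:
    """Translate one OpenAI-style tool call into an MCP `tools/call` request."""
    fn = tool_call["function"]
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        # OpenAI sends arguments as a JSON string; MCP expects an object.
        "params": {"name": fn["name"], "arguments": json.loads(fn["arguments"] or "{}")},
    }


def mcp_result_to_message(tool_call_id: str, result: dict[str, Any]) -> dict[str, Any]:
    """Flatten the text parts of an MCP tool result into an OpenAI `tool` message."""
    texts = [p["text"] for p in result.get("content", []) if p.get("type") == "text"]
    prefix = "ERROR: " if result.get("isError") else ""
    return {"role": "tool", "tool_call_id": tool_call_id, "content": prefix + "\n".join(texts)}


call = {"id": "call_1", "type": "function",
        "function": {"name": "search_code", "arguments": '{"query": "parse_config"}'}}
rpc = tool_call_to_mcp(call, request_id=7)
reply = mcp_result_to_message(
    call["id"], {"content": [{"type": "text", "text": "src/config.py:12"}], "isError": False}
)
```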

Microsoft Semantic Kernel

SK orchestrates “skills” and “plans” inside .NET or Python. Historically each skill wrapped its own API. SK now offers an MCP connector so skills can simply be thin wrappers around MCP tools—centralizing schema & auth.

LangChain Tools

LangChain Tools are in-process Python functions. By adding a small adapter you can import every remote MCP Tool as a LangChain Tool. Benefit: zero re-write when you move from a notebook to production chat.

9 · Ideal Use-Case Profiles

| Domain | Why MCP Shines |
|---|---|
| Autonomous Research Agents | Fetch papers → parse → store highlights → draft summary, all via reusable tools. |
| IDE Copilots | Code search, test runner, CI triggers exposed as Tools. |
| Enterprise RAG Chatbots | Live Resource reads ensure answers are never stale. |
| BI & Analytics | SQL query → chart → schedule export with one standard connector. |
| Smart Home / Factory | Streams + state-change Tools behind local firewall. |

10 · The Road Ahead

MCP is rapidly becoming the lingua franca for tool-using AI. Chatsistant’s next milestones:

- Visual Graph Inspector – real-time trace of every Tool call & Resource pull.

- One-Click Marketplace – Slack, HubSpot, Stripe, SAP servers published by the community.

- Streaming Dashboards – push `resources/subscribe` data into embed-ready charts.

Just as USB-C unified hardware connectors, MCP is poised to unify AI integrations. Chatsistant’s native support means you can build a connector once and unleash it across GPT-4o, Claude Sonnet, Gemini Flash, or whichever frontier model lands next. Less plumbing, more product.

Appendix A · Live Streams & Subscriptions

MCP’s resources/subscribe endpoint lets servers push updates to the host—ideal for chatrooms, IoT telemetry, or stock-ticker agents.

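```
// client → server
{ "jsonrpc": "2.0", "id": 9, "method": "resources/subscribe",
  "params": { "uri": "sensor://line2/temp" } }

// server → client (notification: the resource changed)
{ "jsonrpc": "2.0", "method": "notifications/resources/updated",
  "params": { "uri": "sensor://line2/temp" } }

// client → server (fetch the new value), then the server's response
{ "jsonrpc": "2.0", "id": 10, "method": "resources/read", "params": { "uri": "sensor://line2/temp" } }
{ "jsonrpc": "2.0", "id": 10,
  "result": { "contents": [ { "uri": "sensor://line2/temp", "mimeType": "text/plain", "text": "92.1" } ] } }
```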

Chatsistant streams these notifications into hidden assistant messages. Toggle “Surface resource events” in Agent ⚙ Settings for user-visible alerts.
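In the MCP specification, an update notification (`notifications/resources/updated`) carries only the URI, and the client follows up with `resources/read` to get the new contents. A minimal client-side handler under that assumption: the `send` callback stands in for whatever transport is in use, and the starting request id of 100 is arbitrary.

```python
import itertools
from typing import Any, Callable, Optional

_next_id = itertools.count(100)


def handle_server_message(
    msg: dict[str, Any],
    subscribed: set[str],
    send: Callable[[dict[str, Any]], None],
) -> Optional[str]:
    """On an update for a subscribed URI, request a fresh read; return the URI if handled."""
    if msg.get("method") != "notifications/resources/updated":
        return None
    uri = msg["params"]["uri"]
    if uri not in subscribed:
        return None  # ignore updates for URIs we never subscribed to
    send({"jsonrpc": "2.0", "id": next(_next_id), "method": "resources/read", "params": {"uri": uri}})
    return uri


outbox: list[dict[str, Any]] = []
handled = handle_server_message(
    {"jsonrpc": "2.0", "method": "notifications/resources/updated",
     "params": {"uri": "sensor://line2/temp"}},
    subscribed={"sensor://line2/temp"},
    send=outbox.append,
)
```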

Appendix B · Transport Matrix

| Transport | When to Use | Chatsistant Support |
|---|---|---|
| Stdio (pipe) | Local sibling processes (IDE plugins). | ✔ auto-spawn via CLI wrapper |
| HTTP + SSE | Serverless/container endpoints; firewall-friendly. | ✔ recommended default |
| WebSocket | High-throughput, bidirectional bursts. | β (feature flag) |
| Unix Domain Socket | Low-latency co-located micro-VMs. | Soon™ |

Appendix C · Performance Tuning Cheatsheet

- Batched calls: group `tools/call` requests into multi-id JSON-RPC batches to shave round-trip time (RTT).

- Incremental Resources: expose large docs via a `range` param (offset + length) to avoid token bloat.

- Model selection: route “metadata fetch” to o3-mini and heavy reasoning to GPT-4o; Chatsistant’s Model Router handles this dynamically.
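A `range` parameter is not part of the core `resources/read` request, so this sketch treats it as a server-specific extension: a hypothetical `{offset, length}` object measured in characters (not tokens), with the total size returned so the model can ask for the next slice.

```python
from typing import Any, Optional


def read_with_range(text: str, range_param: Optional[dict[str, int]] = None) -> dict[str, Any]:
    """Serve a slice of a large document; `range_param` is a hypothetical extension."""
    if not range_param:
        return {"text": text, "offset": 0, "total": len(text)}
    offset = max(0, range_param.get("offset", 0))
    length = max(0, range_param.get("length", len(text)))
    return {"text": text[offset:offset + length], "offset": offset, "total": len(text)}


doc = "".join(f"line {i}\n" for i in range(1000))
page = read_with_range(doc, {"offset": 0, "length": 14})
```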

Appendix D · Security Hardening Checklist

- Pin `X-MCP-Token` to a least-privilege secret per agent.

- Allow-list CIDR ranges if the MCP Server is HTTP-facing.

- For state-changing Tools, require a second user-confirmation hook (`requiresApproval`).

- Enable the `audit=true` flag to mirror all JSON-RPC traffic to your SIEM.
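The first two items can be sketched with the standard library: a CIDR check via `ipaddress` and a constant-time token comparison via `hmac.compare_digest`. The token and networks are placeholders (203.0.113.0/24 is a documentation range), the secret would normally come from an environment variable, and the plain-dict header lookup is case-sensitive, unlike real HTTP frameworks.

```python
import hmac
import ipaddress

# Placeholders: load the real per-agent secret from the environment in production.
EXPECTED_TOKEN = "replace-with-per-agent-secret"
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8"), ipaddress.ip_network("203.0.113.0/24")]


def authorize(headers: dict[str, str], remote_ip: str) -> bool:
    """Accept a request only from an allowed network and with the right X-MCP-Token."""
    addr = ipaddress.ip_address(remote_ip)
    if not any(addr in net for net in ALLOWED_NETWORKS):
        return False
    supplied = headers.get("X-MCP-Token", "")
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode(), EXPECTED_TOKEN.encode())
```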

Appendix E · CI & Deployment Patterns

Local Dev → GitHub CI → Docker → Fly.io. Example Dockerfile for a Python Server:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY . .
RUN pip install --no-cache-dir fastmcp uvicorn
# weather_server.py (Section 6) sets its own host and port, so no CLI flags are passed.
EXPOSE 5001
CMD ["python", "weather_server.py"]
```

Appendix F · Debugging & Observability

Chatsistant surfaces an MCP Trace panel:

- Timeline view 🕑 – each Tool/Resource call with duration.

- Token diff 🔍 – shows exact text inserted into the model after a call.

- Error drill-down 🚨 – links JSON-RPC error to server stderr for fast fixes.

For on-prem logs, set MCP_LOG_LEVEL=debug and tail stdout.

You now have everything—from the elevator pitch to wire-level bytes—to put MCP & Chatsistant to work in production.

TL;DR & Easy-to-Digest Friendly Summary

New to MCP and Chatsistant? Here’s the quick breakdown:

- What is MCP?
  A universal “wire” (a JSON-RPC profile) plus three AI building blocks:
  - Tools – functions the AI can call (e.g., `search_code`).
  - Resources – read-only data the AI can fetch (e.g., `file://report.pdf`).
  - Prompts – reusable templates that guide the AI (e.g., “Summarize this doc”).

- Why use it?
  One standard for every connector → no more custom glue code per service → faster, safer, reusable integrations.

- How does Chatsistant use it?
  Chatsistant’s Function-Calling Webhooks act as an MCP Client. You point your MCP Server (Git, Slack, Postgres…) at your agent’s webhook URL and token, and Chatsistant handles the rest.

- Concrete example
  Scenario: You want your AI to fetch today’s sales from a Postgres database.
  Steps:
  - Run a Postgres MCP Server exposing `get_sales(date)`.
  - In Chatsistant, enable Function Calling and paste the webhook URL + token.
  - Ask your agent: “What were sales on 2025-05-13?”
  - The agent calls `get_sales("2025-05-13")`, the server returns the number, and the AI tells you: “Sales on May 13 were $42,350.”

That’s it: MCP gives your AI live access to any system, and Chatsistant makes it effortless to wire up.